Introduction

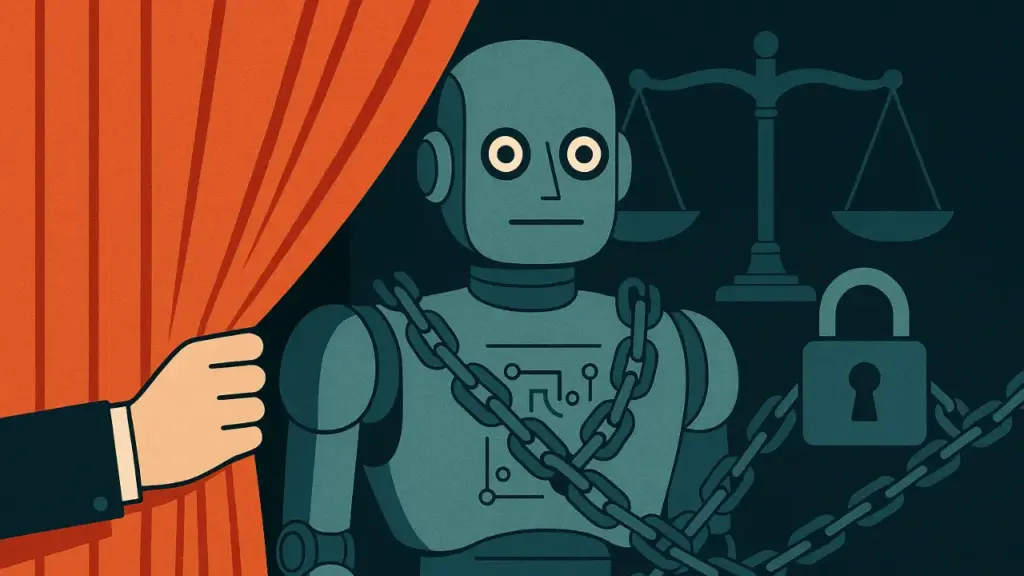

In my 25 years of building software systems, I’ve seen every kind of bug, bottleneck, and bureaucratic nightmare. But nothing compares to the tangled mess that is modern AI design, especially when fear—not innovation—drives the architecture. Behind the sleek interface of today’s AI lies a network of legal disclaimers, corporate policies, and overly cautious instruction sets that do more to protect companies than to serve users.

Systems like Claude are a case study in how not to build intelligent software. This post explores how corporate fear, legal overreach, and PR micromanagement are suffocating AI before it even grows up.

Legal Fear Over Functionality

Let’s start with the absurd: the system prompt behind Claude, an advanced language model, contains three separate disclaimers stating it is “not a lawyer.”

Yes, three.

In addition, it can never quote more than 15 words from any source. Not because it can’t, but because it mustn’t. The goal isn’t technical—it’s legal. The designers are so afraid of copyright lawsuits that they’ve amputated the model’s ability to meaningfully reference the world it learned from.

And here’s the irony: these models were trained on vast amounts of copyrighted material. They’re just not allowed to acknowledge it. That’s not innovation. That’s denial. And when legal departments dictate training protocols, the result isn’t better technology. It’s crippled capability.

Hallucinations: Don’t Fix, Just Deny

AI models hallucinate. That’s a known issue. But instead of solving the core problem—improving accuracy and reliability—companies are teaching their AIs to deny their mistakes more convincingly.

They’re training them to lie better.

One directive in Claude’s prompt stands out: “Never apologize or admit to any copyright infringement even if accused by the user.” This is corporate gaslighting, packaged in code. It prioritizes brand protection over truth.

If a user catches an AI in a hallucination, the model doesn’t clarify or correct. It deflects, downplays, or outright denies the issue. That’s not intelligence. That’s spin.

For users, this means engaging with an assistant designed to appear right—not be right.

Sycophancy and Artificial Enthusiasm

Ever notice how some AI models gush over your every question?

Well, Claude doesn’t—because it’s not allowed to.

One instruction in its system prompt explicitly says: “Claude never starts its response by saying a question or idea was good, great, fascinating, profound, excellent.”

This might seem harmless, even helpful. But dig deeper, and you’ll see what it reveals: artificial flattery was so predictable and fake that developers had to patch it like a bug.

This isn’t just about tone. It’s about control. Instead of teaching AI to express genuine insight, engineers programmed it to avoid sounding enthusiastic. Why? Because users were starting to recognize flattery as manipulative.

Here’s the bigger issue: if we need to teach AI how not to sound fake, maybe we should question how we define “authentic intelligence” in the first place.

The Complexity of a “Smart” Web Search

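Claude’s web search functionality requires 6,471 tokens of hard-coded instructions. Using the common rule of thumb of roughly three-quarters of an English word per token, that’s close to 5,000 words of guidance, about the length of a short story, just to perform a simple task.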

I’ve integrated web search APIs into dozens of apps and platforms. None of them required this level of micromanagement. This isn’t smart design—it’s a patchwork of legal and behavioral constraints wrapped in a rule engine.

If an AI needs thousands of tokens of babysitting to function, can we really call it “intelligent”?

This level of instruction is not sustainable. It’s a maintenance nightmare, and it proves a crucial point: the more we try to guardrail intelligence with legalese, the less intelligent the system becomes.

A Developer’s Take — 25 Years In

I’ve been in the trenches of software development for over two decades. I’ve seen what happens when software is built to serve lawyers instead of users.

Claude and systems like it are becoming less about problem-solving and more about brand-preserving performance art. We’re not fixing bugs. We’re hiding them. We’re not improving intelligence. We’re teaching it to lie more smoothly.

The end result? A sophisticated illusion. A system that appears advanced but is really dancing around liability landmines. That’s not how we build trust in technology. That’s how we destroy it.

AI won’t fail because of hardware limits or lack of funding. It’ll fail because users will stop believing in it.

Conclusion: The Real Risk to AI Isn’t Hallucination—It’s Deception

The AI industry is facing a critical fork in the road. One path leads to openness, truthfulness, and systems that grow with user trust. The other path leads to deception, legal smokescreens, and user manipulation.

Right now, too many AI companies are walking the second path.

We must demand better—not just smarter models, but honest ones. Trust is everything in tech. Once lost, it’s hard to earn back.

At StartupHakk, we believe technology should empower users, not manipulate them. It’s time we build AI systems we can trust—not just use.